Sunday, 26. January 2025

My Most Complex Project So Far

The feeling I have when finishing a project is always a bit weird. On one hand, I'm happy that I got everything wrapped up. On the other, I feel a strange mix of emptiness and anxiety. This week, I finished a project at work that I think is pretty interesting from a technical perspective, at least for me as a junior developer.

What The Project Is About

At the company I work for, I’m part of the "AI-Lab." The company offers services like SEO, SEA, E-Commerce, web development, and web design. The CEO wants to prepare the company for disruptions that might come with the rise of AI. To do this, he set the goal of developing one AI-related project every three months. The "AI-Lab" was created the same month I started, and I was thrown straight into it. Since then, I’ve developed the backend for three projects, and for one, I handled both frontend and backend.

The latest project I finished this week is an AI-powered chatbot. The chatbot helps users find information about the company and connects them with a contact person if needed. The idea is that when someone asks a question, the bot searches the company website for the relevant information and answers the query. My boss requested features like lead generation, spam detection, chat history, Slack and Hubspot integration, and auto-generated summaries of the chat history. I was responsible for around 80% of the backend.

The Stack

The company typically uses PHP/Laravel for the backend. But since we also want to explore AI, there was no way around using Python. The only issue was that nobody at the company had prior experience with Python. We decided that this project would be a good starting point. Since there was already enough to learn with Python basics and AI-related tasks, and we had a tight deadline, we chose a hybrid approach. The business logic would be handled in Laravel, and everything related to AI would be handled in Python/Langchain. For the frontend, we used a component library to speed up development, but I didn’t work much on that.

A big issue with LLMs (Large Language Models) is that they can hallucinate. Sometimes, they come up with things they "invented" on their own. For example, I once gave ChatGPT a list of domain names with prices and asked which one would fit my use case. A few times, ChatGPT suggested domain names and prices that weren’t in my list.

LLM (Large Language Model): A machine learning model trained on vast amounts of text data to generate human-like text based on the input provided.

This is a problem, especially if the bot represents a company. Sensitive questions, like the cost of developing a website, should not be answered by an LLM. To address this, we added a layer between Laravel and Langchain. We used Rasa, an open-source framework for building chatbots, to handle sensitive questions. These are answered with predefined responses. Normally, these questions and answers are defined in config files, which the Rasa model is trained with. To make this adjustable, I built an admin section in Laravel where users can create, edit, or delete questions and answers. The data is sent to a Rasa microservice, which updates the config files, retrains the model, and restarts the service.
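To make the "admin section to config files" step more concrete, here is a minimal sketch of how question and answer entries could be rendered into Rasa training data. It assumes the Rasa 3.x YAML layout (intents with examples in the NLU file, `utter_` responses in the domain file). The `FaqEntry` class and both function names are hypothetical, and the real microservice may structure its files differently.

```python
import json
from dataclasses import dataclass


@dataclass
class FaqEntry:
    """Hypothetical shape of one entry created in the admin section."""
    intent: str           # e.g. "faq_website_cost"
    examples: list[str]   # example user questions for this intent
    answer: str           # the predefined response


def render_nlu_yaml(entries: list[FaqEntry]) -> str:
    """Render intents and example questions (assumed Rasa 3.x NLU layout)."""
    lines = ['version: "3.1"', "nlu:"]
    for entry in entries:
        lines.append(f"- intent: {entry.intent}")
        lines.append("  examples: |")
        lines.extend(f"    - {example}" for example in entry.examples)
    return "\n".join(lines) + "\n"


def render_responses_yaml(entries: list[FaqEntry]) -> str:
    """Render the predefined answers as 'utter_<intent>' responses."""
    lines = ["responses:"]
    for entry in entries:
        lines.append(f"  utter_{entry.intent}:")
        # json.dumps yields a double-quoted string with escaped quotes,
        # which is also a valid YAML double-quoted scalar.
        lines.append(f"  - text: {json.dumps(entry.answer)}")
    return "\n".join(lines) + "\n"


entries = [
    FaqEntry(
        intent="faq_website_cost",
        examples=["How much does a website cost?", "What do you charge?"],
        answer="Pricing depends on the project. Our team will get in touch.",
    )
]
print(render_nlu_yaml(entries))
print(render_responses_yaml(entries))
```

After writing files like these, the microservice would retrain the model (for example with the `rasa train` command) and restart the service.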

So, to summarize:

- We used a frontend component library for the chatbot messages.

- Laravel handled business logic like storing conversations and deciding which microservice to call.

- Three microservices were used:

  - A Rasa chatbot model for predefined questions and answers.

  - A Python API for updating the Rasa chatbot.

  - A RAG (Retrieval Augmented Generation) system built with Langchain.

RAG (Retrieval Augmented Generation): A method that enhances a language model by first retrieving relevant information from a database, which is then used to generate more accurate answers.

Since the Rasa part is fairly simple and doesn’t present major technical challenges, I won’t go into detail about that. The API I built for it is also straightforward: a single endpoint that runs some bash scripts when triggered. What I think is interesting is the business logic in Laravel and the RAG system.

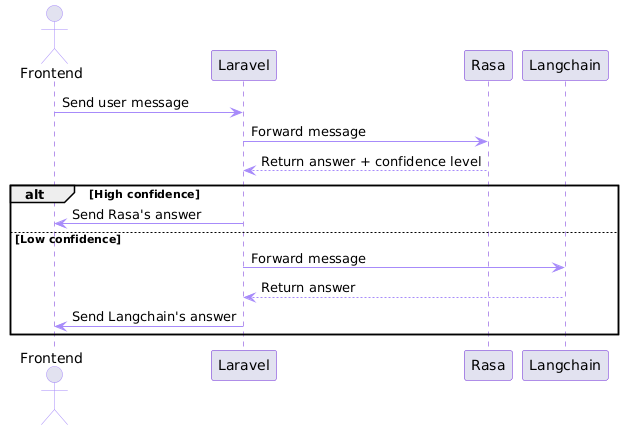

Deciding Which Microservice To Call

I won’t go into all the details of the Laravel business logic here. It would make this article too long, and I’m also not allowed to. But one thing I want to discuss is the logic behind deciding when to call the RAG system and when to call the Rasa system.

Here’s the approach I used: Laravel always calls the Rasa microservice first. The microservice returns two things:

- The best fitting answer for the user’s question or message.

- The confidence level with which it chose that answer.

If the message matches a predefined example, the confidence will be high. If it doesn’t, the Rasa model will still try to find an answer, but the confidence level will be lower. The logic in Laravel compares the confidence value to a threshold, which can be set in the admin section. If the confidence is too low, Laravel doesn’t use the answer from Rasa and instead calls the RAG system.
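The routing logic described above lives in Laravel, but the idea is small enough to sketch in a few lines. The version below is written in Python purely for illustration. The `RasaResult` shape, the function names, and the stand-in services are assumptions, as is the choice to accept an answer when the confidence is equal to the threshold.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RasaResult:
    """Hypothetical shape of the Rasa microservice reply."""
    answer: str
    confidence: float  # assumed to be between 0.0 and 1.0


def route_message(
    message: str,
    ask_rasa: Callable[[str], RasaResult],
    ask_rag: Callable[[str], str],
    threshold: float,
) -> tuple[str, str]:
    """Return (source, answer). Rasa is always asked first."""
    result = ask_rasa(message)
    if result.confidence >= threshold:
        return ("rasa", result.answer)
    # Confidence too low: discard the Rasa answer and fall back to RAG.
    return ("rag", ask_rag(message))


# Stand-in services for demonstration only.
def fake_rasa(message: str) -> RasaResult:
    canned = "Please contact our sales team for a quote."
    if "cost" in message.lower():
        return RasaResult(canned, 0.93)
    # Rasa still returns its best guess, just with low confidence.
    return RasaResult(canned, 0.21)


def fake_rag(message: str) -> str:
    return f"(RAG answer for: {message})"


print(route_message("What does a website cost?", fake_rasa, fake_rag, threshold=0.7))
print(route_message("Where is your office?", fake_rasa, fake_rag, threshold=0.7))
```

Passing the threshold in as a parameter mirrors the fact that it is configurable in the admin section rather than hard-coded.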

RAG System

Quick disclaimer: the RAG system itself is not groundbreaking. But I think it’s worth mentioning because not everyone may be familiar with it.

First, I set up a vector database and fed it documents from the company’s website. The documents need to be within a certain length range for RAG to work efficiently. This length depends on the model used to convert the text into vectors. There are various embedding models that you can either run locally or call via API. Langchain has built-in functions for splitting documents into appropriate lengths. The reason for transforming these texts into vectors is that the vectors capture meaning: texts about similar topics end up close together in vector space, so the system can find relevant documents even when they don’t share the exact words of the question. Comparing vectors is also fast.
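To illustrate what splitting documents into appropriate lengths means, here is a minimal plain-Python sketch. It is a stand-in for the Langchain splitters, not their implementation. It counts words instead of model tokens, and the function name and default sizes are assumptions chosen for the example.

```python
def split_into_chunks(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of at most max_words words.

    Consecutive chunks share `overlap` words so that a sentence cut at a
    chunk boundary still appears with some context in the next chunk.
    """
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be non-negative and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


page_text = " ".join(f"word{i}" for i in range(12))
for chunk in split_into_chunks(page_text, max_words=5, overlap=2):
    print(chunk)
```

Each chunk would then be passed to the embedding model and stored in the vector database together with its vector.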

When a request comes in, the system converts the request message into a vector and performs a similarity search. This compares the user’s message with the documents in the vector database. The system returns the most relevant documents, which, along with the user’s message, are sent to an LLM to generate an answer. We also added a custom prompt to make the LLM behave like a chatbot.
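The request flow above can be sketched without any external services. In the toy example below, word counts stand in for a real embedding model, a sorted list stands in for the vector database, and the prompt wording is invented for illustration. None of this is the project's actual code, and the final call to the LLM is left out.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, embed(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, context_docs: list[str]) -> str:
    """Combine a custom instruction, the retrieved documents, and the question."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "You are a friendly chatbot for our company. "
        "Answer only from the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


documents = [
    "We offer SEO and SEA services for online shops.",
    "Our web design team builds responsive layouts.",
    "The office is closed on public holidays.",
]
question = "Do you offer SEO services?"
top_docs = retrieve(question, documents, k=1)
print(build_prompt(question, top_docs))
```

Restricting the model to the retrieved context is one common way to reduce hallucinations, though it does not eliminate them.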

Conclusion

I built nearly the entire backend myself. Of course, a senior developer reviewed my merge requests and implemented the functionality to fetch website data regularly via API. But aside from that, I handled the project. I came up with the layered structure and implemented the APIs needed to connect the services.

For some, this may still be a relatively simple project, but for me, it was quite complex. I’m proud that I managed to make it work. I’m not an expert in RAG or advanced AI, so there’s still room for improvement. Once feedback comes in, I’m sure there will be plenty to work on.

But I’m looking forward to it. I learned a lot during this project, from Python and Langchain basics to Rasa. I also improved my API-building skills.

I’m curious to see what my next professional project will look like.

Thanks for reading, and happy coding!
